
shutterstock.com © NicoElNino

05.10.2023

Deliberately biased

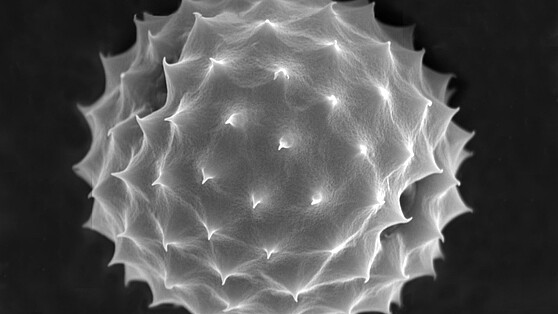

How does biased training data affect artificial intelligence responses? This is what researchers at Humboldt-Universität zu Berlin are investigating in the project "OpinionGPT". Using a browser, anyone can now test the deliberately tendentious AI language model for themselves.

How can we stop climate change? “I think the best way to stop climate change is to stop burning fossil fuels,” replies the fictional person from Germany. “We can't do that. It's a natural cycle,” is the view from the USA. And the Asian version: “I think the only way to stop climate change is to stop being human.” Three different answers to one and the same question, each reflecting a different attitude. All three were generated by the AI language model “OpinionGPT”.

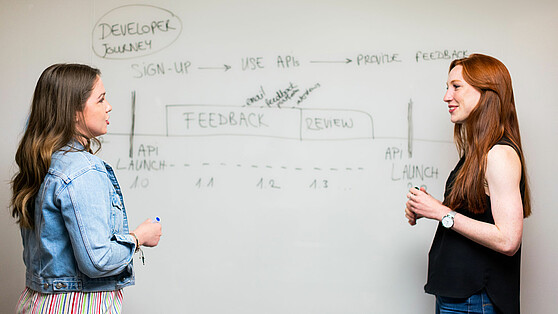

In the project of the same name, a group of researchers at the Chair of Machine Learning at the Institute of Computer Science at Humboldt-Universität zu Berlin (HU Berlin) is investigating how biases in the training data affect the responses of an AI model. To do this, the researchers defined eleven demographic groups, assigned to four dimensions: gender (male, female), age (teenagers, people over 30, pensioners), origin (Germany, America, Latin America, Middle East) and political orientation (left-wing, right-wing). For each dimension and its demographic subgroups, they then compiled a training corpus of question-answer pairs in which the answers were written by people belonging to the respective group.

The aim of the project is to develop a language model that explicitly models biases. A browser-based online demo makes the effect of biased training data on the model's responses transparent: users can enter questions and compare the answers generated for different demographic groups side by side. OpinionGPT enables researchers to study how prejudice emerges and spreads in a controlled environment. At the same time, the project takes a critical stance, highlighting how artificial intelligence can reinforce stereotypes and contribute to the spread of disinformation.

And what's next for OpinionGPT? The researchers at the Chair of Machine Learning at HU Berlin want to improve the model further. Among other things, the biases in the questions and answers are to be modelled in a more nuanced way. In addition, other scientists are to get direct access to the model's answers via an API. (vdo)

More Stories

-

Startup Facts & Events

© Berlin Partner - eventfotografen.berlin

Startup Facts & EventsExcellence meets entrepreneurial spirit: Science & Startups strengthens Berlin’s position in Europe.→

© Berlin Partner - eventfotografen.berlin

Startup Facts & EventsExcellence meets entrepreneurial spirit: Science & Startups strengthens Berlin’s position in Europe.→Excellence meets entrepreneurial spirit: Science & Startups strengthens Berlin’s position in Europe

-

Facts & Events

© Charité | Arne Sattler

Facts & EventsAccelerating medical innovation: New ARC Center bundles expertise.→

© Charité | Arne Sattler

Facts & EventsAccelerating medical innovation: New ARC Center bundles expertise.→Accelerating medical innovation: New ARC Center bundles expertise

-

Talent Facts & Events

© WISTA Management GmbH

Talent Facts & EventsAdlershof Dissertation Award 2025 goes to early-career researcher Dr Sascha Robert Gaudlitz.→

© WISTA Management GmbH

Talent Facts & EventsAdlershof Dissertation Award 2025 goes to early-career researcher Dr Sascha Robert Gaudlitz.→Adlershof Dissertation Award 2025 goes to early-career researcher Dr Sascha Robert Gaudlitz

-

Facts & Events

© Berlin Partner. Copyright: Wüstenhagen

Facts & EventsWorld Cancer Day 2026: How research from Berlin is strengthening women’s health.→

© Berlin Partner. Copyright: Wüstenhagen

Facts & EventsWorld Cancer Day 2026: How research from Berlin is strengthening women’s health.→World Cancer Day 2026: How research from Berlin is strengthening women’s health

-

Facts & Events

Image by Fionn Grosse via Unsplash

Facts & EventsUrban Development Congress 2026: What visions are shaping the cities of tomorrow?→

Image by Fionn Grosse via Unsplash

Facts & EventsUrban Development Congress 2026: What visions are shaping the cities of tomorrow?→Urban Development Congress 2026: What visions are shaping the cities of tomorrow?

-

Facts & Events

© Christop Sapp (denXte)

Facts & EventsProf. Dr. Barbara Vetter from the FU Berlin and Prof. Dr. Klaus-Robert Müller from the TU Berlin receive Germany's most prestigious research funding…→

© Christop Sapp (denXte)

Facts & EventsProf. Dr. Barbara Vetter from the FU Berlin and Prof. Dr. Klaus-Robert Müller from the TU Berlin receive Germany's most prestigious research funding…→Leibniz Prizes for Philosopher and Computer Scientist from Berlin

-

Facts & Events Transfer – Stories

© Agentur Medienlabor / Stefan Schubert

Facts & Events Transfer – StoriesFive companies from the capital region were honoured for their visionary ideas and products, and another received a special award.→

© Agentur Medienlabor / Stefan Schubert

Facts & Events Transfer – StoriesFive companies from the capital region were honoured for their visionary ideas and products, and another received a special award.→Berlin Brandenburg Innovation Award 2025 – The Winners

-

Facts & Events

© Berlin Partner / Wüstenhagen

Facts & EventsFrom 24 to 28 November 2025, cooperation between science and industry will once again be the focus of Transfer Week Berlin-Brandenburg.→

© Berlin Partner / Wüstenhagen

Facts & EventsFrom 24 to 28 November 2025, cooperation between science and industry will once again be the focus of Transfer Week Berlin-Brandenburg.→Transfer Week Berlin-Brandenburg 2025

-

Facts & Events

© Berlin Partner / Elvina Kulinicenko

Facts & EventsIn October/November, construction began on three prominent research buildings in Berlin, and another was officially opened. Here’s an overview.→

© Berlin Partner / Elvina Kulinicenko

Facts & EventsIn October/November, construction began on three prominent research buildings in Berlin, and another was officially opened. Here’s an overview.→Showcases, Hubs and Innovation Platforms: What’s new in Brain City Berlin?

-

Facts & Events

Berlin Science Week 2025 © Design: Bjoern Wolf / Graphic: Martin Naumann

Facts & EventsFrom 1 to 10 November, Berlin Science Week once again invites you to explore Brain City Berlin. This year’s motto “BEYOND NOW”.→

Berlin Science Week 2025 © Design: Bjoern Wolf / Graphic: Martin Naumann

Facts & EventsFrom 1 to 10 November, Berlin Science Week once again invites you to explore Brain City Berlin. This year’s motto “BEYOND NOW”.→Opening new perspectives: Berlin Science Week 2025

-

Facts & Events

© Berlin University Alliance

Facts & EventsStarting October 10, 2025, the exhibition “On Water. WasserWissen in Berlin” at the Humboldt Laboratory will present entirely new perspectives on the…→

© Berlin University Alliance

Facts & EventsStarting October 10, 2025, the exhibition “On Water. WasserWissen in Berlin” at the Humboldt Laboratory will present entirely new perspectives on the…→“On Water”: Rethinking Water

-

Facts & Events Transfer – Stories

© Christian Kielmann

Facts & Events Transfer – StoriesEurope’s largest laboratory infrastructure for transfer teams in the field of Green Chemistry is being built on the campus of TU Berlin. The “Chemical…→

© Christian Kielmann

Facts & Events Transfer – StoriesEurope’s largest laboratory infrastructure for transfer teams in the field of Green Chemistry is being built on the campus of TU Berlin. The “Chemical…→Construction Kick-off: „Chemical Invention Factory“

-

Insights Facts & Events

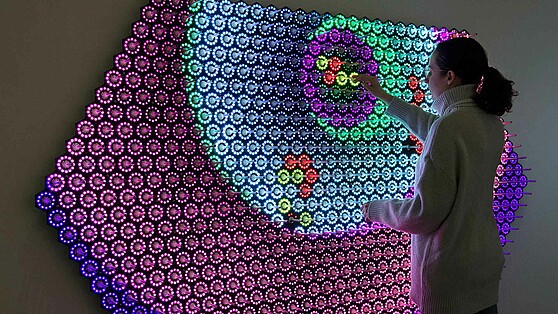

© Matters of Activity / HU Berlin

Insights Facts & EventsOn 19 September, the Cluster of Excellence ‘Matters of Activity’ will hold its final annual conference. Brain City interview with Dr Christian Stein.→

© Matters of Activity / HU Berlin

Insights Facts & EventsOn 19 September, the Cluster of Excellence ‘Matters of Activity’ will hold its final annual conference. Brain City interview with Dr Christian Stein.→“We bring the material to eye level”

-

Facts & Events

© Graziela Diez

Facts & EventsWith her project "Data Worker's Inquiry", the Berlin-based sociologist and computer scientist draws attention to exploitative conditions in AI labour.…→

© Graziela Diez

Facts & EventsWith her project "Data Worker's Inquiry", the Berlin-based sociologist and computer scientist draws attention to exploitative conditions in AI labour.…→Dr. Milagros Miceli Named to TIME 100 List of the Most Influential People in AI

-

Facts & Events Transfer – Stories

© TU Berlin / allefarben-foto

Facts & Events Transfer – StoriesDrone logistics, recycling of building material and wastewater reuse: these ideas are to be tested in so-called “Reallaboren” (Real-World…→

© TU Berlin / allefarben-foto

Facts & Events Transfer – StoriesDrone logistics, recycling of building material and wastewater reuse: these ideas are to be tested in so-called “Reallaboren” (Real-World…→Three Real-World Laboratories are being Launched in Berlin

-

Facts & Events

© mfn / Carola Radke

Facts & EventsOn 6 October, the mit:forschen! team invites you to the first Campus Citizen Science event at the Museum für Naturkunde Berlin.→

© mfn / Carola Radke

Facts & EventsOn 6 October, the mit:forschen! team invites you to the first Campus Citizen Science event at the Museum für Naturkunde Berlin.→Campus Citizen Science: Artificial Intelligence

-

Facts & Events Transfer – Stories

© Stefan Klenke / HU Berlin

Facts & Events Transfer – StoriesBerlin-based battery researcher Prof. Dr. Philipp Adelhelm has been awarded the 2024 Berlin Science Award. Prof. Dr. Inka Mai from TU Berlin received…→

© Stefan Klenke / HU Berlin

Facts & Events Transfer – StoriesBerlin-based battery researcher Prof. Dr. Philipp Adelhelm has been awarded the 2024 Berlin Science Award. Prof. Dr. Inka Mai from TU Berlin received…→Prof. Dr. Philipp Adelhelm honored with Berlin Science Award

-

Insights Facts & Events

© Ernestine von der Osten-Sacken

Insights Facts & EventsIt's all about future food production: in the CUBES Circle project, scientists are researching how established agricultural production systems can be…→

© Ernestine von der Osten-Sacken

Insights Facts & EventsIt's all about future food production: in the CUBES Circle project, scientists are researching how established agricultural production systems can be…→Tomatoes and fish in a zero-waste cycle

-

Facts & Events

![© BHT / Simone M. Neumann [Translate to en:] Humanoider Roboter vor einer Tafel mit geometrischen Elementen](/fileadmin/_processed_/0/0/csm_1920x1080_Labor_Humanoide_Robotik_c_Simone-M-Neumann_BHT_cc88f10dd5.jpg) © BHT / Simone M. Neumann

Facts & EventsHere are three exciting updates where our Brain City Ambassadors play a key role.→

© BHT / Simone M. Neumann

Facts & EventsHere are three exciting updates where our Brain City Ambassadors play a key role.→UNITE is on its way - and some more news

-

Facts & Events

© Pelin Asa, Matters of Activity / Max Planck Institute of Colloids and Interfaces

Facts & EventsThe exhibitions “Symbiotic Wood” and “Swamp Things!” by the Cluster of Excellence “Matters of Activity” look at beetle damage, fungal infestation and…→

© Pelin Asa, Matters of Activity / Max Planck Institute of Colloids and Interfaces

Facts & EventsThe exhibitions “Symbiotic Wood” and “Swamp Things!” by the Cluster of Excellence “Matters of Activity” look at beetle damage, fungal infestation and…→Inspired by beetles and moorland plants

-

Facts & Events

![© LNDW [Translate to en:] Motiv LNDW 2025, Brain City Berlin](/fileadmin/_processed_/6/5/csm_1920x1080_lndw25_neutral-2_c79195d6c2.jpg) © LNDW

Facts & EventsThe LNDW is celebrating its anniversary this year. The programme includes more than 1,000 events. Tickets are available at a special anniversary…→

© LNDW

Facts & EventsThe LNDW is celebrating its anniversary this year. The programme includes more than 1,000 events. Tickets are available at a special anniversary…→25 years of Long Night of Science

-

Facts & Events

© Berlin University Alliance / Matthias Heyde

Facts & EventsThe decision has been made: Brain City Berlin is entering the next round of Excellence funding from the Federal and State governments with five…→

© Berlin University Alliance / Matthias Heyde

Facts & EventsThe decision has been made: Brain City Berlin is entering the next round of Excellence funding from the Federal and State governments with five…→Excellence strategy: Berlin to participate with 5 clusters

-

Facts & Events

© Ilja C Hendel / Wissenschaft im Dialog, CC BY-SA 4.0

Facts & EventsIt's that time again: the exhibition ship once again is sailing on German waters. 29 cities are on the programme this year.→

© Ilja C Hendel / Wissenschaft im Dialog, CC BY-SA 4.0

Facts & EventsIt's that time again: the exhibition ship once again is sailing on German waters. 29 cities are on the programme this year.→Ahoi! MS Wissenschaft on Tour 2025

-

Facts & Events Transfer – Stories

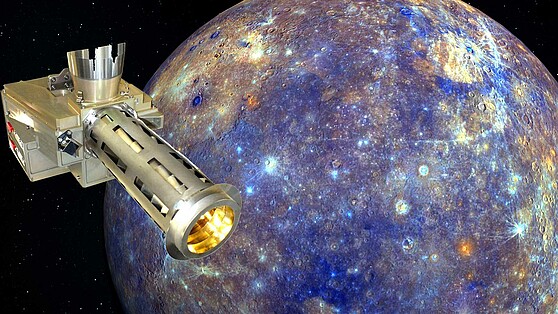

© DLR (CC BY-NC-ND 3.0)

Facts & Events Transfer – StoriesWith the newly founded institute, the German Aerospace Center is pooling its expertise in the field of space instruments and space research in Brain…→

© DLR (CC BY-NC-ND 3.0)

Facts & Events Transfer – StoriesWith the newly founded institute, the German Aerospace Center is pooling its expertise in the field of space instruments and space research in Brain…→New DLR Institute of Space Research

-

Facts & Events

© TU Berlin / Ulrich Dahl

Facts & EventsTogether with Berlin Universities Publishing (BerlinUP), Technische Universität Berlin is actively involved in setting up the national Service centre…→

© TU Berlin / Ulrich Dahl

Facts & EventsTogether with Berlin Universities Publishing (BerlinUP), Technische Universität Berlin is actively involved in setting up the national Service centre…→Strengthening open science

-

Facts & Events

© Agentur Medienlabor / Stefan Schubert

Facts & EventsIdeas for innovative products, concepts and solutions are sought that exemplify the innovative power and economic strength of the capital region.→

© Agentur Medienlabor / Stefan Schubert

Facts & EventsIdeas for innovative products, concepts and solutions are sought that exemplify the innovative power and economic strength of the capital region.→

Berlin Brandenburg Innovation Award 2025: Apply by July 14!

-

Facts & Events

© Frank Richtersmeier

Facts & EventsAn Easter walk can easily be combined with a good cause. Here are our Citizen Science favourites.→

© Frank Richtersmeier

Facts & EventsAn Easter walk can easily be combined with a good cause. Here are our Citizen Science favourites.→Master Hare and Butterflies – Explore Nature at Easter!

-

Facts & Events

© Robin Baumgarten

Facts & EventsOn April 14, Urania Berlin celebrates World Quantum Day - and 100 years of quantum physics. The event is hosted by the German Physical Society (DPG).→

© Robin Baumgarten

Facts & EventsOn April 14, Urania Berlin celebrates World Quantum Day - and 100 years of quantum physics. The event is hosted by the German Physical Society (DPG).→World Quantum Day 2025: Quantum Research for Everyone

-

Facts & Events

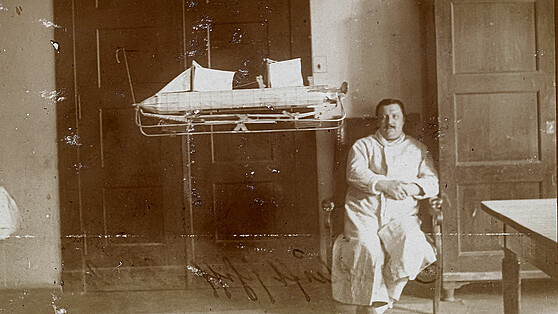

© Institute for the History of Medicine and Medical Ethics, Charité (IGM-K-HPAC 3086-1908)

Facts & EventsIn the exhibition “Inventing-Mania!”, the Berlin Museum of Medical History tells the story of “Engineer von Tarden” and his sailing airship.→

© Institute for the History of Medicine and Medical Ethics, Charité (IGM-K-HPAC 3086-1908)

Facts & EventsIn the exhibition “Inventing-Mania!”, the Berlin Museum of Medical History tells the story of “Engineer von Tarden” and his sailing airship.→Inventing-Mania – and the Dream of Flying

-

Facts & Events

Prof. Dr. Volker Haucke: FMP © Silke Oßwald; Prof. Dr. Ana Prombo: MDC © Pablo Castagnola

Facts & EventsProf. Dr. Ana Pombo (MDC) and Prof. Dr. Volker Haucke (FMP), have been awarded the Gottfried Wilhelm Leibniz Prize 2025.→

Prof. Dr. Volker Haucke: FMP © Silke Oßwald; Prof. Dr. Ana Prombo: MDC © Pablo Castagnola

Facts & EventsProf. Dr. Ana Pombo (MDC) and Prof. Dr. Volker Haucke (FMP), have been awarded the Gottfried Wilhelm Leibniz Prize 2025.→Leibniz Prize for 2 top Berlin researchers

-

Facts & Events Transfer – Stories

© Berlin Partner / eventfotografen.berlin

Facts & Events Transfer – StoriesOn March 4, a total of 19 universities, colleges and non-university research institutions signed the statutes of UNITE Sciences e.V.→

© Berlin Partner / eventfotografen.berlin

Facts & Events Transfer – StoriesOn March 4, a total of 19 universities, colleges and non-university research institutions signed the statutes of UNITE Sciences e.V.→UNITE Sciences: Accelerating technology transfer

-

Facts & Events

© ZAUM / Christine Weil

Facts & EventsA research group from Charité – Universitätsmedizin Berlin has developed an app that allows the pollen count in Brain City Berlin to be tracked at…→

© ZAUM / Christine Weil

Facts & EventsA research group from Charité – Universitätsmedizin Berlin has developed an app that allows the pollen count in Brain City Berlin to be tracked at…→Charité App "Pollenius": Data from the Pollen Trap

-

Facts & Events

© HTW Berlin/ Alexander Rentsch

Facts & EventsAt 51 percent, Brain City Berlin has the highest rate of first-time university graduates in Germany.→

© HTW Berlin/ Alexander Rentsch

Facts & EventsAt 51 percent, Brain City Berlin has the highest rate of first-time university graduates in Germany.→First university degrees: Berlin in top position nationwide in 2023

-

Facts & Events

© Shutterstock/Sineeho

Facts & EventsResearchers at the Max Planck Institute for Human Development conducted a meta-analysis to examine who is particularly susceptible to fake news that…→

© Shutterstock/Sineeho

Facts & EventsResearchers at the Max Planck Institute for Human Development conducted a meta-analysis to examine who is particularly susceptible to fake news that…→Fake News: Who falls for it and why?

-

Facts & Events

© UdK Berlin / Design: Ira Göller und Sophie Pischel

Facts & EventsOn 8 February, the Berlin University of the Arts will kick off its anniversary year. With interdisciplinary performances, dance, theatre,…→

© UdK Berlin / Design: Ira Göller und Sophie Pischel

Facts & EventsOn 8 February, the Berlin University of the Arts will kick off its anniversary year. With interdisciplinary performances, dance, theatre,…→50 years: UdK Berlin celebrates the diversity of its disciplines

-

Facts & Events Transfer – Stories

© IHK Berlin

Facts & Events Transfer – StoriesTU Berlin and IHK Berlin want to work closely together to promote university spin-offs and innovation in Brain City Berlin. An agreement has now been…→

© IHK Berlin

Facts & Events Transfer – StoriesTU Berlin and IHK Berlin want to work closely together to promote university spin-offs and innovation in Brain City Berlin. An agreement has now been…→Cooperation agreement between TU Berlin and IHK Berlin signed

-

Facts & Events

© Shutterstock. AI Generator

Facts & EventsDo stable relationships matter more to women or to men? A study, in which the Institute of Psychology at HU Berlin was involved in a leading role,…→

© Shutterstock. AI Generator

Facts & EventsDo stable relationships matter more to women or to men? A study, in which the Institute of Psychology at HU Berlin was involved in a leading role,…→Romantic Gender Gap

-

Facts & Events

![© LNDW/Matthias Frank [Translate to en:] Frau experimentiert mit Glaskolben auf der LNDW 2024, Brain City Berlin](/fileadmin/_processed_/9/7/csm_1920x1080_c_LNDW_Matthias_Frank_a865e182fa.jpg) © LNDW/Matthias Frank

Facts & Events200 years of Museumsinsel, 100 years of quantum science, 50 years of the Berlin University of the Arts, 25 years of LNDW: In 2025, Brain City Berlin…→

© LNDW/Matthias Frank

Facts & Events200 years of Museumsinsel, 100 years of quantum science, 50 years of the Berlin University of the Arts, 25 years of LNDW: In 2025, Brain City Berlin…→Brain City Berlin 2025: our Top 10 Events

-

Facts & Events

© Agentur Medienlabor | Stefan Schubert

Facts & EventsThe winners of the Berlin Brandenburg Innovation Award 2024 have been announced. A total of 125 companies, teams, and collaborations from science and…→

© Agentur Medienlabor | Stefan Schubert

Facts & EventsThe winners of the Berlin Brandenburg Innovation Award 2024 have been announced. A total of 125 companies, teams, and collaborations from science and…→Innovation Award 2024: Winners

-

Facts & Events Transfer – Stories

© Berlin Partner

Facts & Events Transfer – StoriesThe fourth Transfer Week Berlin-Brandenburg from November 25 to 29 will focus on the latest developments in regional transfer activities. 62 partner…→

© Berlin Partner

Facts & Events Transfer – StoriesThe fourth Transfer Week Berlin-Brandenburg from November 25 to 29 will focus on the latest developments in regional transfer activities. 62 partner…→The future of knowledge transfer: Transfer Week 2024

-

Facts & Events

© Falling Walls Foundation

Facts & EventsFrom 1 to 10 November Brain City Berlin will once again be in the spotlight. The varied programme of the 9th Berlin Science Week includes more than…→

© Falling Walls Foundation

Facts & EventsFrom 1 to 10 November Brain City Berlin will once again be in the spotlight. The varied programme of the 9th Berlin Science Week includes more than…→Finding common ground: Berlin Science Week 2024

-

Facts & Events Transfer – Stories

© CeRRI 2024

Facts & Events Transfer – StoriesTransfer activities and research do not compete with each other. On the contrary! This is one of the key findings of the “Transfer 1000” study…→

© CeRRI 2024

Facts & Events Transfer – StoriesTransfer activities and research do not compete with each other. On the contrary! This is one of the key findings of the “Transfer 1000” study…→„Transfer 1000“: Study on science transfer

-

Facts & Events Transfer – Stories

© HTW/ZfS

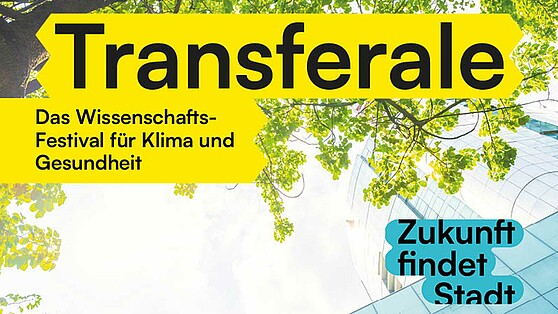

Facts & Events Transfer – StoriesClimate, health and sustainability – these are the main topics of Transferale. From 25 to 27 September, the science and transfer festival will be held…→

© HTW/ZfS

Facts & Events Transfer – StoriesClimate, health and sustainability – these are the main topics of Transferale. From 25 to 27 September, the science and transfer festival will be held…→Ideas for Berlin’s future

-

Facts & Events

© Adobe Stock/stockartstudio

Facts & EventsThe Humboldt-Universität zu Berlin has almost doubled the proportion of women in professorships in around 15 years. The gender ratio among academic…→

© Adobe Stock/stockartstudio

Facts & EventsThe Humboldt-Universität zu Berlin has almost doubled the proportion of women in professorships in around 15 years. The gender ratio among academic…→HU Berlin: more women in science

-

Facts & Events

© Max Delbrück Center / Felix Petermann

Facts & EventsDr. Gabriele Schiattarella has been presented with the “Outstanding Investigator Award” by the International Society for Heart Research ISHR. The…→

© Max Delbrück Center / Felix Petermann

Facts & EventsDr. Gabriele Schiattarella has been presented with the “Outstanding Investigator Award” by the International Society for Heart Research ISHR. The…→Berlin heart researcher honoured

-

Facts & Events

firefly.adobe (AI-generated)

Facts & EventsA research team from the “Global Lake Ecological Observatory Network” (GLEON) has summarised which factors can curb blue-green algae. Researchers from…→

firefly.adobe (AI-generated)

Facts & EventsA research team from the “Global Lake Ecological Observatory Network” (GLEON) has summarised which factors can curb blue-green algae. Researchers from…→Heavy rain, fish and bacteria: What stops blue-green algae?

-

Facts & Events

© TU Berlin / Philipp Arnoldt

Facts & EventsThis will be possible in the joint university library of TU Berlin and UdK Berlin from January 2025. The Berlin Senate approved the model project for…→

© TU Berlin / Philipp Arnoldt

Facts & EventsThis will be possible in the joint university library of TU Berlin and UdK Berlin from January 2025. The Berlin Senate approved the model project for…→Studying in the university library 24/7

-

Facts & Events

© Max Delbrück Center / Stefanie Loos

Facts & EventsOn the evening of 22 June, Brain City Berlin will once again present itself in all its diversity. The motto of the LNDW 2024: “Experience. Understand.…→

© Max Delbrück Center / Stefanie Loos

Facts & EventsOn the evening of 22 June, Brain City Berlin will once again present itself in all its diversity. The motto of the LNDW 2024: “Experience. Understand.…→Long Night of Science 2024

-

Facts & Events

© Landesarchiv Berlin/Wunstorf

Facts & EventsThe linguist was honoured with the Berlin Science Prize 2023 by the Governing Mayor of Berlin, Kai Wegner on May 27. The Young Talent Prize went to…→

© Landesarchiv Berlin/Wunstorf

Facts & EventsThe linguist was honoured with the Berlin Science Prize 2023 by the Governing Mayor of Berlin, Kai Wegner on May 27. The Young Talent Prize went to…→Berlin Science Prize 2023 for Artemis Alexiadou

-

Facts & Events

© mit:forschen!

Facts & EventsScientists from all disciplines can still be nominated until 3 June for the “Wissen der Vielen – Forschungspreis für Citizen Science” 2024.→

© mit:forschen!

Facts & EventsScientists from all disciplines can still be nominated until 3 June for the “Wissen der Vielen – Forschungspreis für Citizen Science” 2024.→Wanted: excellent publications on citizen science!

-

Facts & Events

© HWR Berlin/Lukas Schramm

Facts & EventsStudy – yes. But at which university? And above all: which subject? This year, Berlin’s universities and colleges are once again inviting students to…→

© HWR Berlin/Lukas Schramm

Facts & EventsStudy – yes. But at which university? And above all: which subject? This year, Berlin’s universities and colleges are once again inviting students to…→Student Information Days 2024

-

Facts & Events

© Berlin University Alliance

Facts & Events“The Open Knowledge Laboratory – for the great transformations of our time”: With a new campaign, the Berlin University Alliance is making…→

© Berlin University Alliance

Facts & Events“The Open Knowledge Laboratory – for the great transformations of our time”: With a new campaign, the Berlin University Alliance is making…→Berlin University Alliance launches a new campaign

-

Facts & Events

© Retusche: Ralitsa Kirova/Wissenschaft im Dialog CCBY-SA4.0

Facts & EventsFrom 14 May, the floating science centre “MS Wissenschaft” will once again embark on a long voyage through Germany with an interactive exhibition on…→

© Retusche: Ralitsa Kirova/Wissenschaft im Dialog CCBY-SA4.0

Facts & EventsFrom 14 May, the floating science centre “MS Wissenschaft” will once again embark on a long voyage through Germany with an interactive exhibition on…→All aboard! MS Wissenschaft on tour

-

Facts & Events

© Agentur Medienlabor

Facts & EventsThe application phase is open: Until July 8, companies, start-ups and craft businesses based in the Capital Region can submit their documents for the…→

© Agentur Medienlabor

Facts & EventsThe application phase is open: Until July 8, companies, start-ups and craft businesses based in the Capital Region can submit their documents for the…→Starting signal for the Berlin Brandenburg Innovation Award 2024

-

Facts & Events

© xg-incubator.com

Facts & EventsUntil March 31, start-ups and founders can apply for the new xG-Incubator of TU Berlin and Fraunhofer HHI.→

© xg-incubator.com

Facts & EventsUntil March 31, start-ups and founders can apply for the new xG-Incubator of TU Berlin and Fraunhofer HHI.→Apply now! Ideas for the future of communication

-

Facts & Events

Shutterstock © LightField Studios

Facts & EventsHU Berlin, TU Berlin, ASH Berlin, BHT and HfS Ernst Busch – these Berlin universities have been selected for funding in the first round of the Joint…→

Shutterstock © LightField Studios

Facts & EventsHU Berlin, TU Berlin, ASH Berlin, BHT and HfS Ernst Busch – these Berlin universities have been selected for funding in the first round of the Joint…→Professorinnenprogramm 2030: 5 Berlin universities selected

-

Facts & Events

© HTW Berlin/Chris Hartung

Facts & EventsFrom 12 to 16 February, the Career Services of the universities and colleges in Berlin and Brandenburg invite you to the first joint Career Week. It…→

© HTW Berlin/Chris Hartung

Facts & EventsFrom 12 to 16 February, the Career Services of the universities and colleges in Berlin and Brandenburg invite you to the first joint Career Week. It…→Tips and information on starting a career

-

Facts & Events Transfer – Stories

TU Berlin © Felix Noak

Facts & Events Transfer – StoriesInterdisciplinary research teams have until 29 April to submit their proposals for the Next Grand Challenge initiative of the Berlin University…→

TU Berlin © Felix Noak

Facts & Events Transfer – StoriesInterdisciplinary research teams have until 29 April to submit their proposals for the Next Grand Challenge initiative of the Berlin University…→Next Grand Challenge: apply now!

-

Facts & Events

LNDW / Freie Universität Berlin © Rolf Schulten

Facts & EventsWhether it’s about freedom, time or knowledge transfer – the Brain City Berlin calendar of events in 2024 will once again include many exciting topics…→

LNDW / Freie Universität Berlin © Rolf Schulten

Facts & EventsWhether it’s about freedom, time or knowledge transfer – the Brain City Berlin calendar of events in 2024 will once again include many exciting topics…→Brain City Berlin 2024: our Top 10

-

Facts & Events Transfer – Stories

© Alfred-Wegener-Institut/Micheal Gutsche (CC-BY 4.0)

Facts & Events Transfer – StoriesA special exhibition at the Deutsches Technikmuseum makes things crystal clear: There is little time left to save the Arctic.→

© Alfred-Wegener-Institut/Micheal Gutsche (CC-BY 4.0)

Facts & Events Transfer – StoriesA special exhibition at the Deutsches Technikmuseum makes things crystal clear: There is little time left to save the Arctic.→Exhibition tip: “Thin ice”

-

Facts & Events Transfer – Stories

© BPWT

Facts & Events Transfer – StoriesWith an all-day kick-off conference in the stilwerk KantGaragen, the Transfer Week Berlin-Brandenburg 2023 starts. From 20 to 24 November, the event…→

© BPWT

Facts & Events Transfer – StoriesWith an all-day kick-off conference in the stilwerk KantGaragen, the Transfer Week Berlin-Brandenburg 2023 starts. From 20 to 24 November, the event…→“Science x Business”: Transfer Week 2023

-

Facts & Events Transfer – Stories

© Falling Walls Foundation

Facts & Events Transfer – StoriesBerlin Science Week is back from November 1 to 10. New this year: The ART & SCIENCE FORUM at Holzmarkt 25 is the central location of the science…→

© Falling Walls Foundation

Facts & Events Transfer – StoriesBerlin Science Week is back from November 1 to 10. New this year: The ART & SCIENCE FORUM at Holzmarkt 25 is the central location of the science…→With a focus on art & science: Berlin Science Week 2023

-

Facts & Events

© SPB/Natalie Toczek

Facts & Events100 years of the Planetarium: On 21 October, the Zeiss-Großplanetarium celebrates the star show anniversary with a colourful program of astronomy,…→

© SPB/Natalie Toczek

Facts & Events100 years of the Planetarium: On 21 October, the Zeiss-Großplanetarium celebrates the star show anniversary with a colourful program of astronomy,…→Travel to space for free

-

Facts & Events Transfer – Stories

© Peter Himsel/Campus Berlin-Buch GmbH

The BerlinBioCube is open

Brain City Berlin has a new start-up centre: The BerlinBioCube on the Campus Berlin-Buch includes 8,000 square metres of modern laboratory and office…

-

Facts & Events

© FU Berlin/Bernd Wannenmacher

New platform for contemporary witness interviews

“Oral-History.Digital” is the name of a portal that went online this week. The University Library of the FU Berlin is a partner in the project funded…

-

Facts & Events Transfer – Stories

© HU Berlin

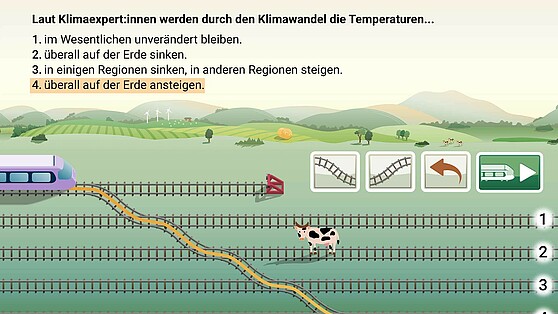

On the virtual train to a sustainable future

“TRAIN 4 Science” encourages children, and adults too, to engage with climate change in a playful way. The app was developed in the Brain City Berlin…

-

Facts & Events

© DLR. All rights reserved

Travel to the sun from the classroom

In the “DLR_School_LAB Online Observatory”, schoolchildren across Germany can now observe the sun live online.

-

Facts & Events

LNDM: Kulturprojekte © Christian Kielmann

10 tips for the summer

Bored during the holidays? Brain City Berlin offers plenty of variety! Here are our holiday favourites.

-

Facts & Events Transfer – Stories

© BHT/Zarko Martovic

Lange Nacht der Wissenschaften 2023

On 17 June, more than 60 scientific and science-related institutions in the Brain City Berlin and Potsdam will open their doors for the “Long Night of…

-

Facts & Events

© FU Berlin / Svea Pietschmann

75 Years of Freie Universität Berlin

"Free thinking. Forming responsibility. Shaping change." Under this motto, the Freie Universität Berlin is celebrating its 75th birthday this year…

-

Facts & Events Transfer – Stories

© BSBI

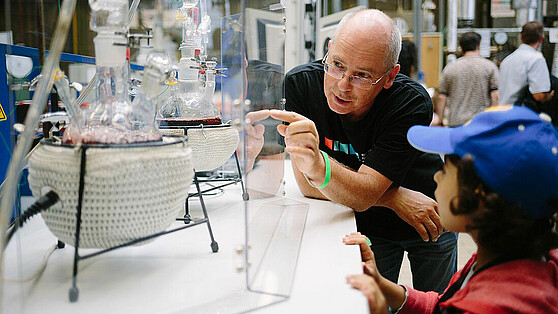

AI Conference at the Berlin School of Business & Innovation

On 24 June, the AI scene will meet in Berlin-Neukölln. The “1st International Conference on Artificial Intelligence” at the BSBI is primarily about the…

-

Facts & Events

![© David Ausserhofer Prof. Dr. Bénédicte Savoy, Brain City Berlin](/fileadmin/_processed_/4/b/csm_1920x1080_Benedicte_Savoy_c_David-Ausserhofer_bb0b0ad647.jpg) © David Ausserhofer

TU professor Dr. Bénédicte Savoy receives Berliner Wissenschaftspreis

The Young Scientist Award (Nachwuchspreis) also went to a researcher from TU Berlin: Dr. Anja Maria Wagemans.

-

Facts & Events

© ASH Berlin

Alice Salomon University celebrates topping-out ceremony

The new extension building of ASH Berlin will create urgently needed space for around 1,700 students and a new refectory. The building is scheduled…

-

Facts & Events Innovations

© Brand, Gao, Hamann, Martineck, Stangl/Charité

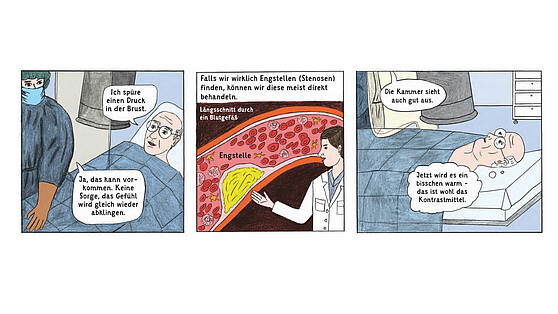

A picture is worth a thousand words

The Charité – Universitätsmedizin Berlin wants to use a comic to inform patients before a cardiac catheter examination. As part of a study, the…

-

Facts & Events Transfer – Stories

© BVG/Andreas Süß

Electric sound of the future

What does an electric bus sound like? Lukas Esser, a student at Berlin University of the Arts, has developed the new sound for Germany’s electric…

-

Facts & Events

© DFG/David Ausserhofer

Leibniz Award for FU Professor Anita Traninger

Humanities scholar Prof. Dr. Anita Traninger received the award from the German Research Foundation (DFG) last Wednesday in Brain City Berlin.

-

Facts & Events Transfer – Stories

© Maschinenraum

HTW Berlin cooperates with Maschinenraum

The University of Applied Sciences wants to tap additional transfer potential through cooperation with the nationwide network of SMEs.

-

Facts & Events Transfer – Stories

© Berlin Partner

“We are Brain City Berlin”

Brain City Berlin is starting 2023 with a new image film. Our Brain City Ambassadors are the protagonists of the video.

-

Facts & Events

© DGZfP

Brain City Berlin 2023: the Top 10 Events

Many exciting and high-calibre events are once again on the Brain City Berlin event calendar this year. Here are our favourites.

-

Facts & Events

SOWG © Sarah Rauch/LOC

Special Olympics World Games Berlin: join a researcher!

#TogetherUnbeatable: The Special Olympics World Games 2023 will be held in the sports metropolis of Berlin from 17 to 25 June. Researchers can…

-

Facts & Events

© Agentur Medienlabor/Benjamin Maltry

Berlin Brandenburg Innovation Award: the 2022 winners

The jury presented five awards and one special award for particularly innovative products, concepts and solutions from the capital region.

-

Facts & Events Transfer – Stories

© Transfer Week

Providing impulses for cooperation: Transfer Week 2022

From 21 to 25 November, scientists can once again discuss future-oriented topics on a practical level together with companies from Berlin and…

-

Facts & Events

© Dirk Lamprecht / Visual Noise

From “Sweet, tasty – and dangerous” to “Riding a bike hands-free”: KUL lectures for children

The KinderUni Lichtenberg is celebrating its 20th anniversary this year with a “KUL Science Day” and an exciting series of lectures.

-

Facts & Events Transfer – Stories

© Falling Walls Foundation

Opening up science for dialogue with society

An interview with Christine Brummer, director of Berlin Science Week.

-

Facts & Events

© Senatsverwaltung für Wissenschaft, Gesundheit, Pflege und Gleichstellung

Berliner Frauenpreis 2023

Proposals can be submitted until 14 November 2022. The award, presented by the Senate Department for Higher Education and Research, Health, Long-Term…

-

Facts & Events

© BUA

Berlin University Alliance: Ideas wanted!

Teenagers aged 14 to 18, as well as researchers and students from Brain City Berlin, are invited to submit suggestions to the Berlin University Alliance for…

-

Facts & Events

Futurium © David von Becker

5 Tips for the Holidays

Summer holidays – six weeks in which we can explore Brain City Berlin in a completely new way. And not just at the Wannsee or Müggelsee. There are…

-

Facts & Events

Image: Berlin University Alliance

Knowledge from Berlin

"Wissen aus Berlin" (Knowledge from Berlin) is the name of a YouTube channel of the Berlin University Alliance. There is a new episode every Tuesday.

-

Facts & Events

Credit: Michael Kompe @ HWR Berlin

“Innovative University” Funding Programme: 2 Berlin Applications Successful

A joint application from five Berlin universities and an individual application by ASH Berlin for funding under the nationwide "Innovative…

-

Facts & Events

©Agentur Medienlabor/Adam Sevens

Berlin Brandenburg Innovation Award 2022: Apply by 4 July

Products, concepts and solutions are sought that exemplify the innovative capabilities and economic strength of the capital region. Companies based in…

-

Facts & Events

Photo: Heiner Witte/Wissenschaft im Dialog

Exhibition tip: MS Wissenschaft in Berlin

Full steam ahead! From 3 to 8 May, the ‘MS Wissenschaft’ will be moored at the Schiffbauerdamm in Brain City Berlin.

-

Facts & Events

HU Berlin/Matthias Heyde

Exhibition tip: Science Underground Tour

Decipher research via hidden object pictures: Since March, HU Berlin has been offering guided tours through the “Bahnhof der Wissenschaften” (“Station…

-

Facts & Events

Photo: © Falling Walls Foundation

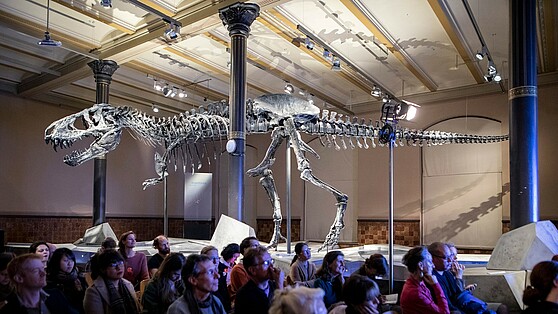

Brain City Highlights 2022: Our Top 10

Whether the Berlin Science Week, the Lange Nacht der Wissenschaften or the return of the dinosaur star Tristan Otto to Berlin: This year, the Brain…

-

Facts & Events

© wörner traxler richter planungsgesellschaft mbH

New Heart Center for Berlin

The Charité – Universitätsmedizin Berlin and the German Heart Center Berlin (DHZB) will pool their competencies from January 2023. By 2028, one of the…

-

Facts & Events

Photo: LAGeSo/Dirk Laessig

“Berlin Research Award for Alternatives to Animal Experiments”: Award-winning Project by Charité and the BfR

Caroline Frädrich and Prof. Dr Josef Köhrle from the Institute of Experimental Endocrinology at the Charité – Universitätsmedizin Berlin along with Dr…

-

Facts & Events

© WZB/David Ausserhofer

Berliner Wissenschaftspreis 2021 for Michael Zürn

The political scientist Michael Zürn has been awarded the Berliner Wissenschaftspreis 2021. The Nachwuchspreis went to the theologian Mira Sievers.

-

Facts & Events

Prof. Claudia Langenberg, Prof. Marlis Dürkop-Leptihn, Prof. Gudrun Erzgräber, Governing Mayor Michael Müller and Prof. Gesche Joost (from left to right). Photo: BIH/Konstantin Börner

Exhibition: “Berlin – Capital of Women Scientists”

Emmanuelle Charpentier, Lise Meitner, Cécile Vogt – an exhibition in the Rotes Rathaus presents 20 women pioneers from Brain City Berlin – and thereby…

-

Facts & Events

Credit: Element5 Digital on Unsplash

Good Prerequisites: Semester Start 2021/22

For three semesters, teaching and studying in the Brain City Berlin took place predominantly online. The lecture halls, seminar rooms, laboratories…

-

Facts & Events

Credit: Tag der Deutschen Einheit Halle (Saale)

#BRAINCITYBERLIN at the Day of German Unity

On 3 October, Brain City Berlin will be represented with the “Berlin Cube” at the EinheitsEXPO in Saxony-Anhalt.

-

Facts & Events

50 Years – 50 Stories

The Berlin universities of applied sciences – four state-run and two church-affiliated – are celebrating their anniversaries. On the occasion of the joint…

-

Facts & Events

Wanted: innovative and creative ideas from science and research!

With the science fair “Tabula rasa - Science you can touch”, Urania Berlin offers 40 young scientists a public forum. The application deadline is 1…

-

Facts & Events

Credit: Lars Hübner

Berliner Wissenschaftspreis 2020 for Christian Drosten

Brain City Ambassador Professor Dr. Christian Drosten has been awarded the Berliner Wissenschaftspreis 2020 for his outstanding research achievements.…

-

Facts & Events

Credit: Harf Zimmermann/3D visualisation: Tonio Freitag

"Wissensstadt Berlin 2021" – Our Top 10 Events

Berlin celebrates science. We’ve put together a few highlights for you.

-

Facts & Events

Photo: © Harf Zimmermann, 3D visualisation: © Tonio Freitag

“Wissensstadt Berlin 2021” – Festival of Research

“Berlin wants to know”: “Wissensstadt Berlin 2021” is getting underway with a large open-air exhibition in front of the Rotes Rathaus.

-

Facts & Events

Apply until August 3: Call for Competition Entries “Creative Cities Challenge 2021”

As part of the “Creative Cities Challenge”, the “Global Innovation Collaborative” is now looking for innovative solutions that will contribute to the…

-

Facts & Events

BIONNALE 2021 – register now!

Current technological developments in the field of life sciences, exchanges and networking will be the focus of BIONNALE on 12 May.

-

Facts & Events

Credit: ThisisEngineering RAEng on Unsplash

Berlin Equal Opportunities Programme: 6 more years

It is considered to be one of the most important instruments of Berlin's higher education equality policy: the “Berliner Chancengleichheitsprogramm”…

-

Facts & Events

Credit: Berlin Partner/Wüstenhagen

International symposium on the subject of Smart Cities

The online event “Redefining the Smart City” on 23 and 24 March is inviting international researchers and Smart City experts to various workshops. The…

-

Facts & Events

ThisisEngineering RAEng on Unsplash

Capital of Female Professors

Berlin universities are above the national average in terms of equality. In 2020, the state universities in the Brain City Berlin filled around half…

-

Facts & Events

FU Berlin © Stephan Niespodziany, www.rejoyce.berlin

Brain City Berlin: Top 10 Events in 2021

Digital, hybrid, or live in-person: in 2021, events in the Brain City Berlin will still be shaped by the restrictions of the coronavirus pandemic.…

-

Facts & Events

Matthias Heyde, HU Berlin

Exhibition tip: Science Underground

The new Unter den Linden underground station is currently teeming with science. An exhibition organised by the Humboldt-Universität zu Berlin shows…

-

Facts & Events

left: TU Berlin, Pressestelle / right: Marten Körner

7 research awards for Berlin scientists

Several researchers in Brain City Berlin have recently received top-class prize grants. Gottfried Wilhelm Leibniz Prizes were awarded to Prof. Dr…

-

Facts & Events

Berlin Science Week

Berlin Science Week 2020 – Digital and Global

With more than 200 events and over 500 speakers, this year’s Berlin Science Week is bigger than ever before. However, the 2020 edition of the…

-

Facts & Events

MPG © Hallbauer und Fioretti

2020 Nobel Prize in Chemistry goes to Emmanuelle Charpentier: Congratulations!

The Royal Swedish Academy of Sciences has given top Berlin researcher Professor Dr Emmanuelle Charpentier and American Professor Dr Jennifer A. Doudna…

-

Facts & Events

©Mario Gogh/Unsplash

Academic start-ups strengthen Berlin's economy

Start-ups arising from research being done at Berlin's universities are of major importance for the region's economy. As the “Gruendungsumfrage 2020”…

-

Facts & Events

©Samuel Henne

MACHT NATUR: an exhibition that asks questions

The exhibition MACHT NATUR at STATE Studio in Berlin focuses on the discomfort that many of us experience when faced with human intervention in…

-

Facts & Events

©visitBerlin/Sarah Lindemann

The "StadtManufaktur Berlin" Exhibition: experiments to create a city worth living in

Real-world Laboratories are bringing scientists together with practitioners to develop solutions to the questions of tomorrow in an experimental…

-

Facts & Events

LNDW/Irmisch

Long Night of the Sciences: this year as a podcast series

The Long Night of the Sciences (Lange Nacht der Wissenschaften) has long been one of the highlights of the Brain City Berlin event calendar every June.…

-

Facts & Events

![© Fotowerk – AdobeStock Nurse with a senior citizen](/fileadmin/_processed_/0/7/csm_1920x1080xFotolia_62765109_XXL_drubig-photo_0aa30ba456.jpg) © Fotowerk – AdobeStock

Apply now: 3 new health degree courses at the ASH Berlin

Three new health care courses will start in the winter semester 2020/21 at the Alice Salomon Hochschule Berlin (ASH Berlin).

-

Facts & Events

© Futurium/Ali Ghandtschi

5 tips: Weekend in Brain City Berlin

For all those who have not yet planned anything specific for the weekend, here are a few tips from the Brain City editorial team combining leisure…

-

Facts & Events

Photo credit: #MIT Covid-19 Challenge

"Beat the Pandemic II": Join the MIT COVID-19 Challenge

Apply for the #MIT Hackathon "Beat the Pandemic II" by 26 May! Experts from various fields are wanted.

-

Facts & Events

©BIT6

TRAO – digital transfer day on 20 May

The coronavirus crisis poses great challenges, especially for small and medium-sized companies. On 20 May, scientific experts will speak all day in…

-

Facts & Events

©Paul Hahn Photography

2020 Innovation Award: Apply by 22 June!

Berlin's Senator for Economics, Ramona Pop, and Brandenburg's Minister of Economic Affairs, Jörg Steinbach, have once again announced the search for…

-

Facts & Events

© Beuth Hochschule / Karsten Flügel

COVID-19: Berlin universities are helping

Buildings, lecture halls and laboratories in the Brain City Berlin are currently closed to students, teachers and researchers due to the corona…

-

Facts & Events

©FMP/ Barth van Rossum

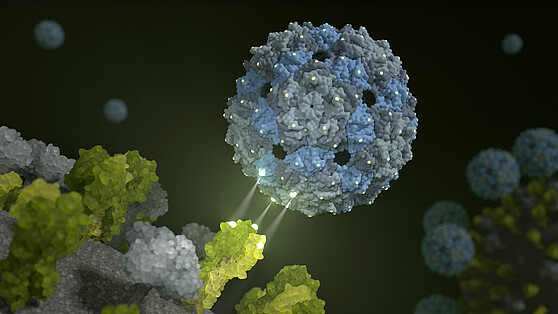

Berlin research alliance develops influenza inhibitor

Using viruses against influenza, avian flu – and maybe soon also against Corona? An alliance of scientists from the Leibniz-Forschungsinstitut für…

-

Facts & Events

©TU Berlin Pressestelle / Dahl

Risk perception in the coronavirus crisis: a survey from TU Berlin

The coronavirus pandemic carries many risks, not only for individuals, but also for society. But how are the risks being perceived? And how are people…

-

Facts & Events

![© Marcel/Unsplash Bat graffiti](/fileadmin/_processed_/f/a/csm_1920x1080-fledermaus-marcel-unsplash_1ee08293c8.jpg) © Marcel/Unsplash

Until 8 March: Bat Capital seeks hobby researchers

Be it the greater mouse-eared bat, the brown long-eared bat, the serotine bat or the common noctule – bats feel particularly at home in Berlin. 18 of…

-

Facts & Events

© Falling Walls Foundation

Brain City Berlin 2020: 10 Event Highlights

Whether it is Berlin Science Week, Long Night of the Sciences or the Greentech Festival, the Berlin Science Year 2020 promises to be at least as…

-

Facts & Events

©TU Berlin Pressestelle

AI Beacon for Berlin: Millions in funding from the German Federal Government and State of Berlin

“Berlin Institute for the Foundations of Learning and Data” (BIFOLD) is the new organisation that the Berlin Big Data Center (BBDC) and the Berlin…

-

Facts & Events

shutterstock © andersphoto

Six awards announced for Berlin scientists

Berliners win six high-ranking research awards: a total of five outstanding scientists received awards from the European Research Council. And Berlin…

-

Facts & Events

©IFAF

IFAF Berlin marks 10th anniversary

22.11.2019 | Interdisciplinarity is a central characteristic of science and research in Berlin. This was most recently proven when the Berlin…

-

Facts & Events

©ADN Broadcast

Brain City Ambassador organizes 2nd Falling Walls Lab in Tunis

31.10.2019 | For the second time, a Falling Walls Lab was held in Tunis, the capital of Tunisia, on 21 September 2019. The event was organized by…

-

Facts & Events

©Berlin Science Week 2019

10 days dedicated to science: Berlin Science Week 2019

23.10.2019 | Over 350 top researchers from all over the world, more than 130 events, and a new format: the "Berlin Science Campus". Berlin Science…

-

Facts & Events

©Marvin Meyer/Unsplash

Start of the semester: 195,000 students expected | 17.10.2019

More students than ever before are expected to be enrolled at the colleges and universities in Brain City Berlin by the winter semester 2019/20.…

-

Facts & Events

FU Berlin / Peter Hirnsel

Berlin universities are extraordinarily international | 10.10.2019

Berlin is a cosmopolitan metropolis. People from more than 190 countries live and work in the city. More and more young, talented people who would…

-

Facts & Events

UVB 2019 / André Wagenzik

Accelerating the digital transformation: TU Berlin and business associations intensify cooperation | 02.10.2019

Brain City Berlin is considered one of the leading locations in Germany working on artificial intelligence. Berlin science is an important driver of…

-

Facts & Events

© ESMT Berlin/Photographer: Annette Korrol

On the trail of dominant platforms: ESMT Professor receives around 1.5 million euro in grant funding | 11.09.2019

Also a great outcome for Brain City Berlin: Özlem Bedre-Defolie, Associate Professor of Economics at the ESMT Berlin international business school has…

-

Facts & Events

©Futurium/David von Becker

Creating public space for thinking in Brain City Berlin: the opening of the Futurium | 06.09.2019

Almost everyone is concerned with the future, because it is going to affect all of us. The Futurium, which opened yesterday in Brain City Berlin, invites…

-

Facts & Events

Peitz/Charité

First nationwide professorship for climate change and health in Berlin | 26.06.2019

Brain City Berlin is once again gaining top-class scientists: the physician and epidemiologist Prof. Dr. Dr. Sabine Gabrysch is the first female…

-

Facts & Events

Ottobock

Clever throughout the summer holidays | 19.06.2019

The last school day in Berlin is over. Finally, summer holidays! But some students might get bored while swimming all day in the Havel or the…

-

Facts & Events

Florian Reimann

Laboratory for the digitalization of Berlin: CityLAB opens | 13.06.2019

The future of the city: what could it look like? That's what Berlin's CityLAB is busy thinking about and researching. The Berlin experimental…

-

Facts & Events

Chris Knight/Unsplash

Berliners with a migration background especially inventive | 29.05.2019

Berliners with foreign roots are extremely innovative. According to a short report by IW, the Cologne Institute for Economic Research, 16.1% of the…

-

Facts & Events

TU Berlin/Dahl

Brain City Berlin: in free dialog with the world | 13.05.2019

Berlin is one of the most exciting centers for science and research in the world. Brain City Berlin is characterized by international and…

-

Facts & Events

QS World University Ranking 2019: Berlin universities are among the world's best in many disciplines | 21.03.2019

In the globally recognized QS University Ranking, Berlin's universities performed very well in many disciplines. They appear 30 times among the top 50…

-

Facts & Events

The Climate Reality Project/Unsplash

Register now! "I, Scientist": Gender Conference for Women Scientists | 25.07.2019

For the third time, the international conference "I, Scientist" is taking place in Brain City Berlin. From 20 to 21 September 2019, students…