
BHT/Martin Gasch

01.02.2022

“Robots Can Save Us a Great Deal of Work”

Whether in cleaning, manufacturing, service or care facilities, robots are becoming part of our daily lives. Together with his team at the Human.VR.Lab, Dr.-Ing. Ivo Boblan, Professor of the Humanoid Robotics Study Programme at the Berliner Hochschule für Technik (BHT), is researching how humans and technology can interact more effectively in future. One focus of his work: human acceptance of robots. What has to be in place for us to be able to communicate with robots? And where does our acceptance of them waver? The Brain City Ambassador and spokesperson for the research group Humanoid Robotics and Human-Technology Interaction (HARMONIK) tells us more in this Brain City interview.

Prof. Dr.-Ing. Boblan, you are one of the most renowned robotics researchers in Germany. What fascinates you about this topic?

I grew up with automation, with technology that moves. Even during my school years, I was soldering and assembling mechanical things. I was determined to study robotics; back then, it was called automation technology. The robots I dealt with during my degree at TU Dresden, and later at TU Berlin, were still very rudimentary in their functions and very awkward to program. But even then, in the early 1990s, I knew that this was the future.

What makes robots so exciting as a research area?

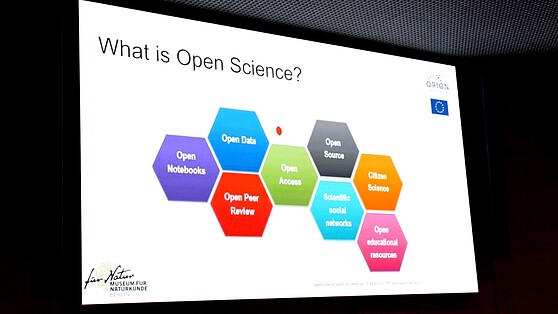

Robots can save us a great deal of work. They can work for us non-stop, and they work far more accurately than we do. Today’s technology is, of course, far more advanced than it was in the 1990s. We’re no longer simply dealing with articulated or SCARA robots, the typical robots used in industry. Research is now heading towards humanoid robots. They have elbows, shoulders, knees and other joints, and therefore greater freedom of movement. The exciting thing about them is that they can be programmed with freely available open-source software from platforms such as GitHub and high-level languages such as Python to control and move them as needed. This is also easy and quick for students to learn. From as early as the second semester of our Humanoid Robotics programme, students collaborate with PhD students on a robotics topic.

Robots are becoming more involved in our daily lives. What are the developmental challenges you face here?

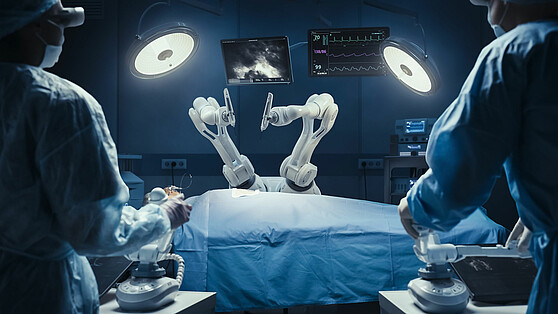

It’s neither enjoyable nor healthy for humans to perform monotonous tasks for hours on end. Robots, on the other hand, are great at this. Industrial robots in the automotive industry, for example, have been welding and painting reliably and precisely for years. The challenge now lies in developing robotic systems that can ease the everyday burden on humans, whether at home or in care facilities. They should be able to support physiotherapists by lifting and carrying patients, or help older people with objects that are difficult to reach, such as taking cups off a shelf. These are physically demanding routine activities. What's important is that talking, listening and other social interactions should still be undertaken by humans. The basic question, however, is what humans still want to do in future and which tasks would be better performed by robots.

Your research also focusses on the topic of soft robotics: to what extent should robots adapt their behaviour towards humans?

For robots to become part of our daily lives in future, they’ll need to be soft and flexible in their movements. They must adapt to our characteristics. This is the only way we’ll accept them and feel comfortable around them. Modern industrial robots still move in a very stiff fashion. This feels strange to us humans, as our own movements are naturally flexible. In concrete terms, this means that we need to make the motors and limbs of future robots softer. Flexible pneumatic actuators such as fluid muscles have been on the market for a while now. They are inherently flexible and, when used in pairs, can generate soft movements in robotic systems that resemble those of human limbs. In the near future, robots will be so cheap that almost everyone will be able to afford one. The question is: will we accept them? This will only happen if they are as human-like as possible. And by this I don’t mean that they look humanoid, with skin, hair and a face, but rather that they act humanoid.

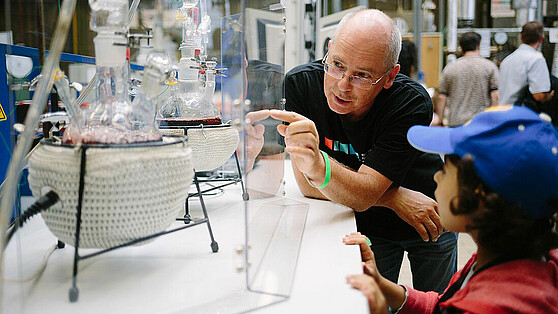

Prof. Dr.-Ing. Ivo Boblan with his team and robot "Digit".

What should the robots look like then? In Berlin, the popular “Myon” robot developed by the Neurorobotics Research Laboratory of your colleague Prof. Dr Manfred Hild has a humanoid appearance. Doesn’t that contradict what you just said?

No, not at all. Myon has two arms, two legs and something that resembles a head. But he is small, can’t carry much, has electric motors in his joints and his fingers don’t move. He also doesn’t have skin, hair or a human face. He can be identified as a piece of technology right away. When I talk about humanoid robots, I mean those that could be mistaken for humans from a distance, such as the Ameca robot recently presented at CES 2022 in Las Vegas, or ‘Geminoid HI-1’, Hiroshi Ishiguro’s robot twin. If robots are too similar to us, we find them creepy. According to the uncanny valley hypothesis put forward by Japanese roboticist Masahiro Mori back in 1970, our acceptance of robots that appear almost human drops sharply, and they elicit eerie feelings. For a robot to be accepted, it may not even need a head; a speech display could suffice. However, all of these questions remain largely unanswered. They can, or even must, be answered by the very people who want to interact with robots.

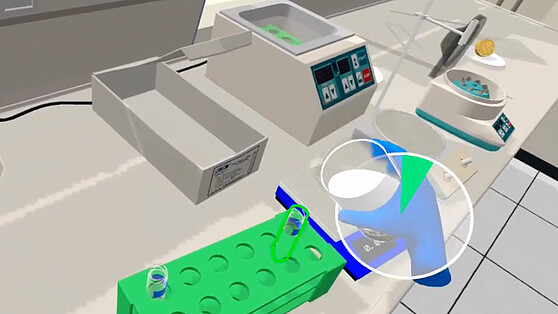

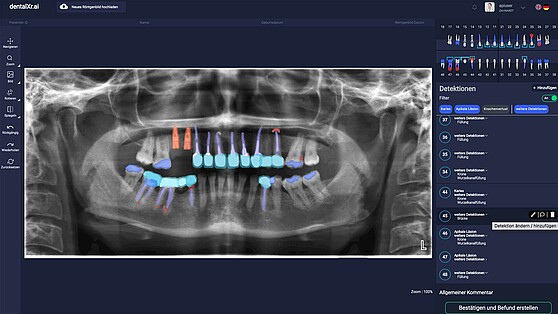

In the BHT’s Human.VR.Lab, you’re testing robots using VR/AR and AI in various application areas. Can you tell us what they are?

Our Human.VR.Lab is a well-equipped laboratory where we carry out movement analyses, for example. Here, we attach markers to human joints to track their movements. We then transfer these movement patterns to the robots. In doing so, we’re trying to make our robot Digit move in a more human-like way. We can also integrate other human characteristics by using virtual and augmented reality (VR/AR). This allows us to test the degree of acceptance without actually having to build the entire robot, which is far quicker and cheaper. We’ll also be working with sociologists of technology at the Human.VR.Lab to better understand how we can make interacting with robots more enjoyable.

Can you give an example?

If a robot holds out its hand at an angle of 30 degrees, people spontaneously decide whether or not to “repair” this action. We either take the hand and accept this deviation in movement, or we don’t. The question here is at what position, or at what degree of deviation from expectations, people still feel addressed, and at what point they ignore the extended robot hand. What do we need to change in the technology so that people forgive as many errors of the “unsatisfactory” robot as possible and repair the interaction? We’re focussing on this topic as part of my MIT-engAge young research group at TU Berlin, which is sponsored by the BMBF.

In contrast, the ‘EPI’ project deals with exoskeletons, that is, with structures that support humans, right?

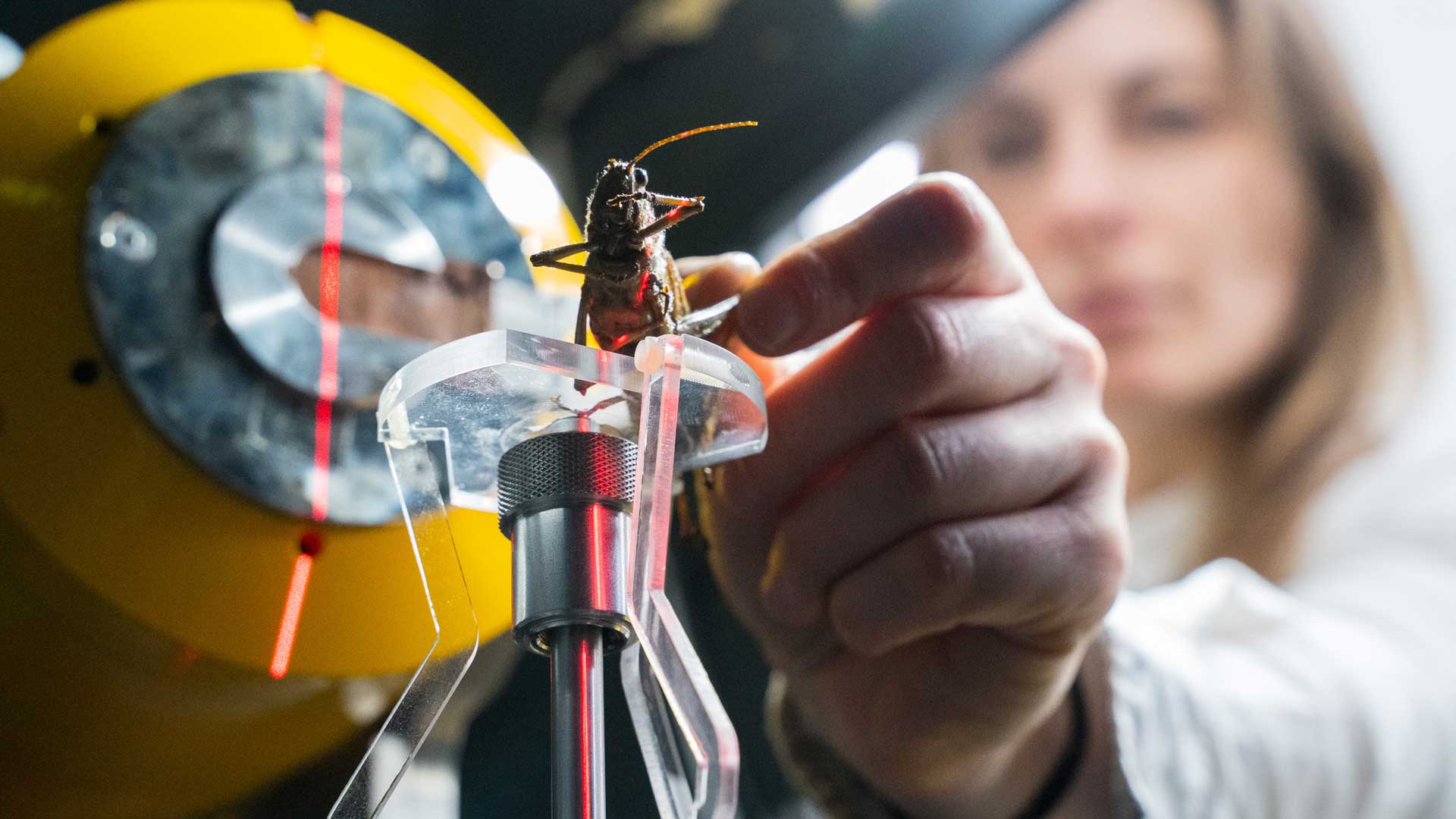

Yes, exactly. Together with the HTW University of Applied Sciences (HTW Berlin) and several industrial partners, we’ve developed two passive exoskeletons inspired by the leap of a grasshopper as part of a project sponsored by IFAF Berlin, which is currently in its extension phase. They are designed to mechanically help humans lift and carry heavy objects. It’s not about giving us superhuman abilities; it’s more about reducing back pain, slipped discs, knee problems and fatigue when working under high physical strain, or mechanically supporting people with a limited range of motion. The exoskeletons developed in the project are currently being tested by our project partners in real workplaces.

What is the objective of the BHT’s research group ‘Humanoid Robotics and Human-Technology Interaction (HARMONIK)’, for which you are now a speaker?

At the end of 2020, we launched two research groups at the BHT. ‘Data Science +X’ deals with learning behaviour, data mining and data quality, among other things. With HARMONIK, we want to optimise interactions between humans and robots using existing robot systems. This happens on different levels. On the one hand, bachelor’s theses are currently investigating how to communicate intuitively with a robot, for instance when asking it to lift a box: do you talk to it, control it with a joystick, or look at it while pointing towards the box? On the other hand, we want to improve the interactive experience and change robot systems without expensive and time-consuming processes. As mentioned earlier, we achieve this using VR/AR: we compensate for the robot’s shortcomings, such as a missing head or the lack of delicate hands, with virtual augmentation and then test this combination of real and virtual worlds in interactions between humans and robots. Of course, I can’t do all this alone; I work in close cooperation with my colleagues Prof. Dr Kristian Hildebrand, Prof. Dr.-Ing. Joachim Villwock and Prof. Dr.-Ing. Hannes Höppner. Fifteen professors from the social sciences, life sciences, IT, robotics and engineering work together in the group. Data Science +X and HARMONIK work in close partnership to research and teach at the Human.VR.Lab and bring technology closer to people. Another goal of HARMONIK is to consolidate the Human.VR.Lab as a place where all players work together across universities to research and teach human-centred technology. A major advantage of Berlin is that things can be implemented quickly here, since we’re not tied to old methods, and because there are lots of people here who want to explore and develop new ideas and tools.

The BHT’s Lunchtalk #08 is titled “How will humans and robots communicate in future?” Do you have an answer to this?

There will always be a difference between the senses of humans and the sensors of technology. We have our five traditional senses; technology has cameras, speakers and microphones. Ideally, we’ll talk to technology, because that’s the easiest. But we are emotionally shaped primarily by our sense of touch. In the near future, there will be tools that let us feel and create a sense of closeness despite distance, for example in video calls. We’ll presumably also be able to smell things from a distance. These are emotional connections that we humans need. We researchers are working on robots that communicate using all of the human senses. (vdo)
