Fish, bees, and self-driving cars

07.06.2019 | Brain City Berlin is considered one of Germany's leading locations for artificial intelligence. About 30% of all German AI companies, and almost half of all German AI start-ups, are based in the capital. A key driver of this development is the large number of institutions and universities conducting high-level research into innovative applications and solutions. One example is the Dahlem Center for Machine Learning and Robotics (DCMLR), where researchers are working, among other things, on improving self-driving vehicles. They are also using robots and AI to learn about bees and fish.

The little guppy looks like any other: just an inch or two long, with big black-pupilled eyes and metallic skin. But that is where the resemblance to a real fish ends. This guppy is not a living creature of flesh, blood, and bone but a robofish. It "swims" along a fine, transparent line through a flat, square test basin, guided by a robot beneath the base plate, which in turn is controlled by software that tells it where to move.

Sometimes the robofish steers towards a small group of real guppies in the pool; sometimes they follow it. Two cameras track the action and record the fish's movements.

Social bonding with the robofish

As one might guess, the robofish is not there to entertain the fish in the tank. Instead, it is the star of a research project at the Biorobotics Lab of the Dahlem Center for Machine Learning and Robotics (DCMLR) at the Free University of Berlin (FU Berlin). "At DCMLR, we use robots and AI to explore the biological intelligence of fish and bees," explains Dr. Tim Landgraf, a professor of computer science who leads the robofish project and the Artificial and Collective Intelligence working group. The aim of the project is for the robots to learn from the fish: they should adapt their behavior intelligently to the school so that the fish will follow them. To this end, the movement data is collected, analyzed, and used to calculate movement patterns in the tank.

Tim Landgraf and his team have already achieved some initial successes: "It has been shown, for example, that guppies follow a robofish about four times longer when its behavior takes the reactions of the other fish into account. When the fish are darting around nervously and the robot approaches them less aggressively, for instance, the fish build trust in it, creating a social bond that translates into longer leadership."

Bees navigate largely by sight

In their work with bees, Tim Landgraf and his team want to find out how the insects communicate. In the long term, they also aim to feed the data and insights gained into the development of new technologies. "We don't even know exactly how bees orient themselves, or how their brains represent the structure of the world," says Tim Landgraf.

In the NeuroCopter project, for example, the researcher and his colleagues were able to show that bees navigate largely by sight: bees caught at a feeding site and carried about a quarter mile away by a quadrocopter still manage to find the shortest way home to their hive. During the flight they hang from a thin wire, which gives them a clear view of the world below. Apparently, the bees store images of landmarks in their memory. This lets them find their way home faster than a control group transported in an opaque box. Later, Landgraf and his colleagues used electrodes to record the bees' brain activity, obtaining the first clear signals from the nerve cells of bees in flight. Currently, researchers at the DCMLR are tracking the bees of a hive on the institute's premises in order to learn more about their behavior across bee generations and to translate this knowledge into the development of intelligent technologies.

The AI used today is still weak

Although research in artificial intelligence is making rapid progress through projects such as these, truly autonomous systems and machines will not be common anytime soon. Professor Raúl Rojas confirms this.

The specialist in artificial neural networks founded the AI working group at FU Berlin in 1989, laying the foundations for the work of the Dahlem Center for Machine Learning and Robotics. "The artificial intelligence used today is purely data-driven, so-called weak AI. We are still a long way from strong AI that learns and acts on its own," the AI expert says, adding: "Artificial intelligence can currently be nothing more than an assistance system for humans, because data-driven approaches work differently than the human brain. We still have to do the mental work."

Autonomous vehicles will certainly come

Alexa, Siri, and Roomba: weak AI can be found in almost every household. Artificial intelligence helps physicians compare X-rays and blood counts, and many factories already use robots. Intelligent parking and driving assistance systems are built into most cars today. But the use of strong AI is still a long way off. "Autonomous vehicles will surely come. The question is when. Personally, I think it will take at least 20 more years before we see self-driving cars on the streets," said Professor Daniel Göhring, who heads the Autonomous Vehicles Working Group at DCMLR together with Raúl Rojas.

"MadeInGermany": the autonomous test vehicle

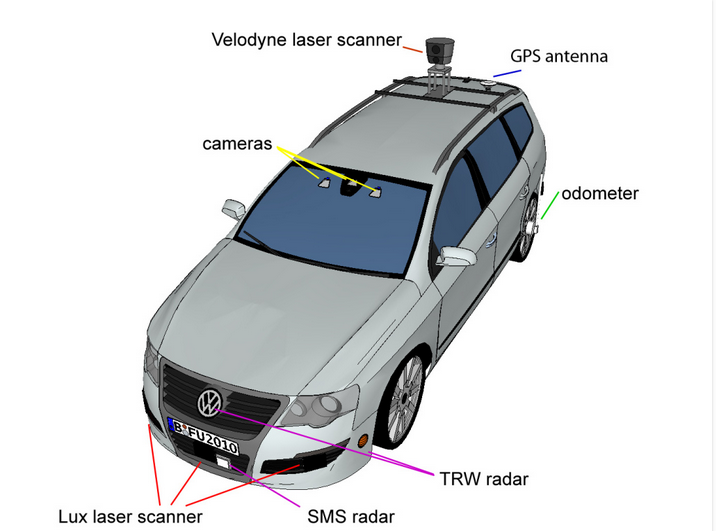

Daniel Göhring speaks from experience. Since 2011, an electric car and a Passat, both converted into autonomous vehicles in cooperation with VW Research, have been driving on defined test routes through Berlin. One of these is the digital urban traffic test field in Berlin-Reinickendorf, run by the Berlin research and development project "Safe Automated and Connected Driving" (SAFARI).

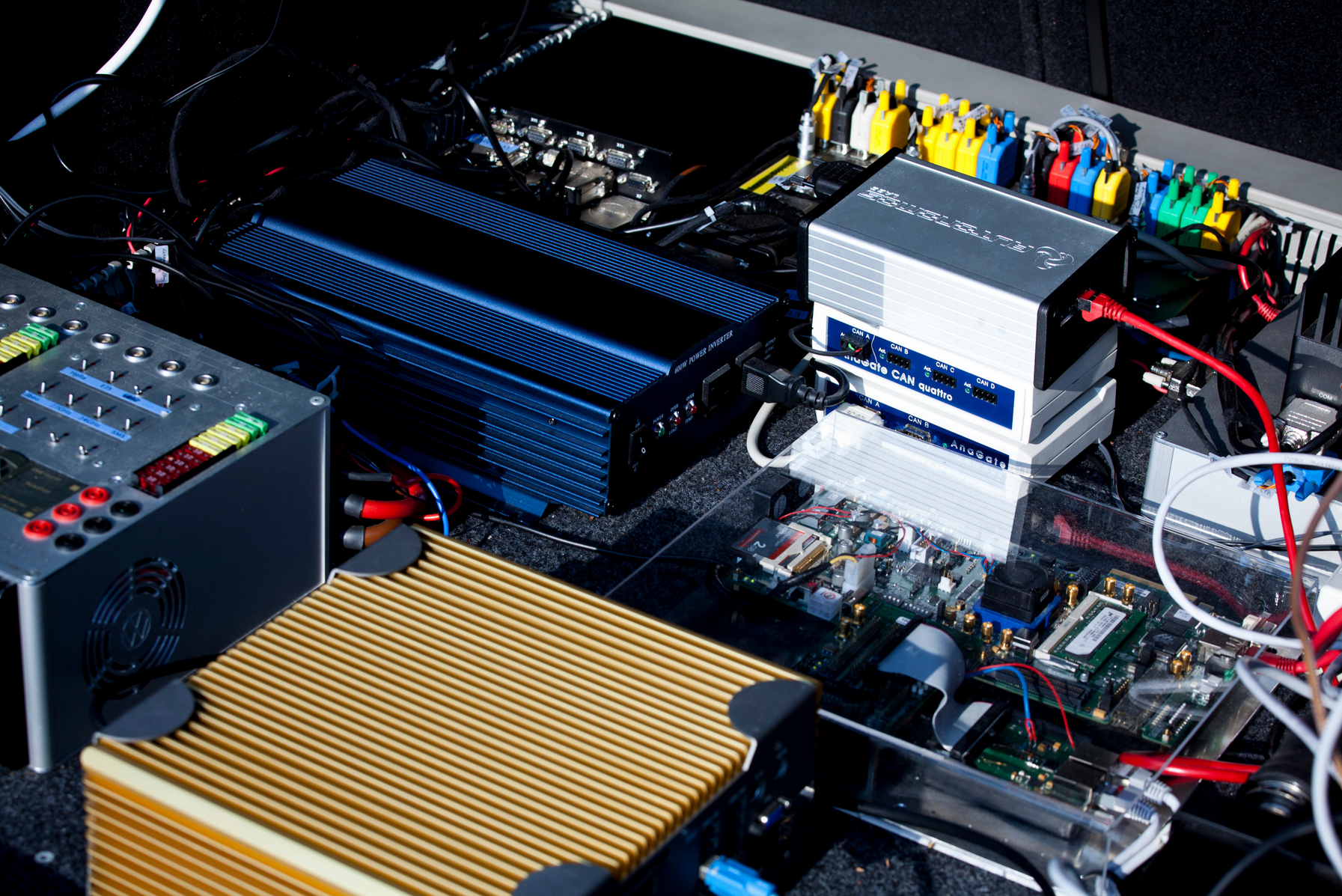

The Passat named "MadeInGermany" is equipped with laser sensors, cameras, GPS, accelerometers, and radar. So-called sensor fusion, which combines and cross-checks data from the different sensors in real time, allows the car to drive autonomously and to respond quickly and precisely to control commands from the computer.
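The article does not describe how "MadeInGermany" actually fuses its sensor data. As a minimal, hypothetical illustration of the general idea, the Python sketch below combines two independent, noisy position estimates (say, one from GPS and one from the laser sensors) by inverse-variance weighting, one of the simplest fusion rules and equivalent to a single Kalman-filter measurement update. The `Estimate` type, the `fuse` function, and all sensor values are assumptions made for this example; a real vehicle fuses many sensors over time and in several dimensions.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """A 1-D position estimate in metres, with its variance in m^2."""
    value: float
    variance: float


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Combine two independent estimates by inverse-variance weighting.

    The less noisy sensor (smaller variance) gets the larger weight, and
    the fused variance is smaller than either input variance.
    """
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    value = (w_a * a.value + w_b * b.value) / (w_a + w_b)
    variance = 1.0 / (w_a + w_b)
    return Estimate(value, variance)


# Hypothetical readings of the car's position along the lane:
gps = Estimate(value=12.0, variance=4.0)    # coarse GPS fix
laser = Estimate(value=10.0, variance=1.0)  # more precise laser-based fix
fused = fuse(gps, laser)
print(f"{fused.value:.2f} m, variance {fused.variance:.2f} m^2")
# prints "10.40 m, variance 0.80 m^2"
```

The fused position leans toward the more precise laser reading, and its variance is lower than that of either sensor on its own, which is the basic payoff of combining several sources.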

Trees as additional landmarks

In addition to GPS data, the vehicle orients itself primarily by distinctive landmarks along the road, such as trees. In the Reinickendorf test field, the car can already communicate with the traffic lights via a communication interface. Nevertheless, for safety reasons, a driver is always on board, ready to take the wheel at any time. In addition, a co-pilot keeps an eye on the central computer's calculations throughout the drive.

"What our vehicle cannot yet do well is improvise, for example, finding the right way around construction sites or detours when markings have changed or are missing," said Raúl Rojas. "MadeInGermany" also cannot yet recognize and interpret the gestures and intentions of other road users.

Recharging like bees

Maybe AI research into autonomous driving will be able to learn from the bees, too. There are already some initial approaches in the field of electromobility. As the researchers in Berlin-Dahlem have found, hungry bees that run out of "fuel" occasionally tap other bees for honey. This principle could well be transferred to e-cars: cars with an empty battery could be hooked up to ones with a full charge. This would be extremely useful, and not only in traffic jams. (vdo)